Questions Regarding Power Cable ABX Test

Comments

-

OK, the initial post raises some valid points, nearly all of which I agree with. The shroud appears to not be pulled out as far in some of the pics, so I think that was done to photograph the gear. Having some absorption on the front wall between the speakers can be beneficial in many rooms, but any statement on its impact here is pure conjecture.

I will say your points are somewhat undermined by HI. He's half-deaf in one ear and can still hear cable differences. Seriously, dude is permanently off-axis, so the guys next to blue-shirt aren't that bad off in comparison.

However, your cursory review of the literature is a complete misstatement. Psychologists run blinded tests. A psychologist INVENTED the blinded test. Peer review of psychological publications pretty much demands blinding or a reasonable explanation of why it was not done. The two books you cite are their own special branch of research (which I really wouldn't generalize to "psychology"), but even they take it as a given that the tests you're running are blinded. I'm pretty sure that the first even explicitly describes the ABX. In fact, if your "literature review" failed to find double blinds in the abstracts, it's because the authors didn't think it was worth mentioning, whereas if it was unblinded, it's the first thing they'd mention.

I'm currently doing analysis on 3 product preference studies. All blinded, despite the fact that it's a total pain and this particular product is heavily reliant on its packaging for recognition.

DarqueKnight wrote: »Please be patient. You know it takes time to search Google, Roger Russel and the Audioholics archives for the appropriate response.

Wow, after all that talk about keeping things civil, you're trolling your own thread?

Gallo Ref 3.1 : Bryston 4b SST : Musical fidelity CD Pre : VPI HW-19

Gallo Ref AV, Frankengallo Ref 3, LC60i : Bryston 9b SST : Meridian 565

Jordan JX92s : MF X-T100 : Xray v8

Backburner:Krell KAV-300i -


I will say your points are somewhat undermined by HI. He's half-deaf in one ear and can still hear cable differences. Seriously, dude is permanently off-axis, so the guys next to blue-shirt aren't that bad off in comparison.

While it is true that I have hearing loss in one of my ears, I've had it all my life. I've been critically listening to music for 40 years. My mind and my ears are accustomed to hearing things one way and thus have adjusted. I don't know any different than what I've heard all my life, but my mind and ears have been trained in the same manner that a normal hearing audiophile's ears and brain have. The miracle of the human body being able to intelligently adjust to changes in the body or to birth defects is a fact.

That said, the guys sitting next to, above, or behind the "blue-shirt", assuming they all have normal hearing, would be at a great disadvantage from being seated off-axis. It can't possibly be an accurate test if they are not in the sweet spot. IMHO, the changes in sound from the sweet spot to the off-axis fellas would hinder their ability to hear subtle changes, especially the usually subtle changes in the sound of power cords.

I totally disagree with your point above. -

hearingimpared wrote: »I totally disagree with your point above.

Fair enough. I can't see for ****, which I think has enhanced my ability to make out blurry text, since I do it all the time.

However, the more general point that has been made time & again by DQ is that cable differences are important to the degree that they would be noticeable even off-axis. Or put differently, can you hear cable differences a few feet off-axis in your own rig? -

Wow, after all that talk about keeping things civil, you're trolling your own thread?

Not true at all. In all of the cable debate threads here, the naysayers simply post up old test links, one-sided opinions by others, etc. This is a known FACT as the naysayers are constantly asked what THEIR experience is with testing different ICs & speaker cables and associated gear and all but one that I know of have not or can not or will not respond to that question.

So, I think what Ray posted is fairly accurate based on past experience with these various threads, that is an observation, not trolling. -

Fair enough. I can't see for ****, which I think has enhanced my ability to make out blurry text, since I do it all the time.

However, the more general point that has been made time & again by DQ is that cable differences are important to the degree that they would be noticeable even off-axis. Or put differently, can you hear cable differences a few feet off-axis in your own rig?

I don't recall DK making any such statement but I'll take your word for it, he can chime in to verify.

As far as the bolded portion of your post, to be fair and honest, I've never tried to listen to cable changes off-axis as my sweet spot while deep is rather narrow, so I can't honestly answer your question. -

However, the more general point that has been made time & again by DQ is that cable differences are important to the degree that they would be noticeable even off-axis. Or put differently, can you hear cable differences a few feet off-axis in your own rig?

Really? (I assume by "DQ" you mean me. :)) Where exactly did I say, or even imply, that as a general point? Certainly not in this thread. Here is a direct quote from the first post in this thread:

DarqueKnight wrote: »I get a wide, deep, holographic sound stage from my two channel audio system when I am seated in the stereophonic sweet spot. However,

1. If I stand up at the "sweet spot" listening position, I lose some audio details.

2. If I stand or sit along the wall behind the "sweet spot" listening position, I lose some audio details.

3. If I sit or stand to the far left or far right of the "sweet spot" listening position, I lose A LOT of audio details.

4. If someone is seated next to me while I am in the "sweet spot" listening position, I lose a little or a lot of details, depending on the size of the person. A person sitting next to me also diminishes the tactile sensations coming from the sound stage.

If you read my cable reviews, you will find that my results have been variable. Sometimes I heard a difference and sometimes I did not. Sometimes I heard a big difference and sometimes I heard a little difference. Sometimes differences, if they were huge, were discernible off axis, even from another room, but this was the exception rather than the general rule. You will also find that, in cases where I did not hear a difference, I was thrilled to return the cable and get cash back. ;)

However, your cursory review of the literature is a complete misstatement. Psychologists run blinded tests. A psychologist INVENTED the blinded test.

What I actually said was:

DarqueKnight wrote: »It is interesting to note that the psychological literature does not seem to support the use of blind tests as a valid evaluative tool for sensory stimuli.

Therefore, if you can point to some peer-reviewed scientific literature that supports the use of blind tests, and particularly ABX or DBX tests, for sensory stimuli, I would appreciate it.

Peer review of psychological publications pretty much demands blinding or a reasonable explanation of why it was not done. The two books you cite are their own special branch of research (which I really wouldn't generalize to "psychology"), but even they take it as a given that the tests you're running are blinded. I'm pretty sure that the first even explicitly describes the ABX.

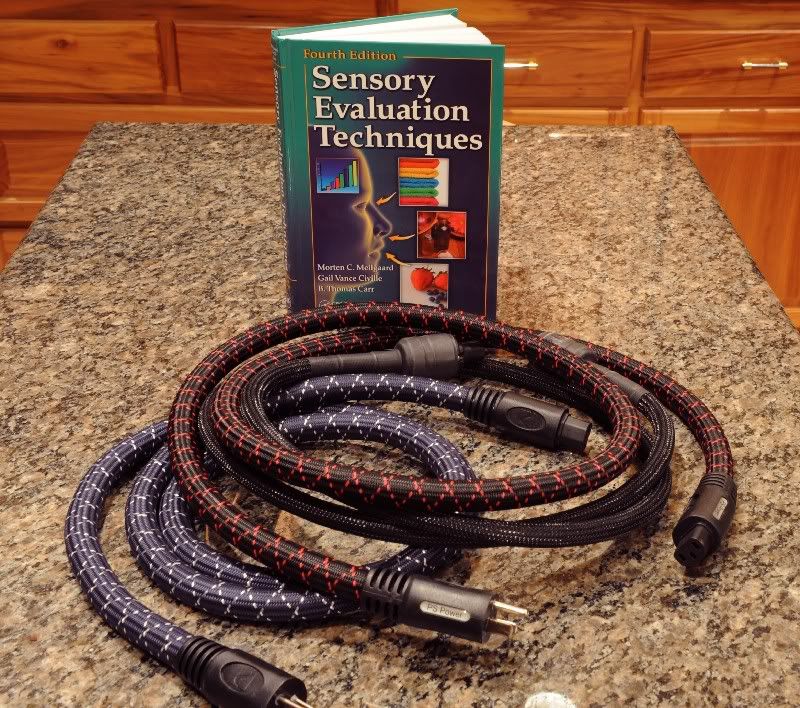

Speculating much? I have attached a brochure for the "Sensory Evaluation Techniques" book that describes the contents in detail. The two books I mentioned are not psychology texts, but they describe tests used by psychologists in evaluating sensory stimuli. In fact, "Sensory Evaluation Techniques" is widely regarded as the standard guide manual, across many scientific fields (psychology, food science, engineering, etc.), for conducting evaluative trials for sensory stimuli.

You can go to Amazon.com and read the index for the "Sensory Evaluation Techniques" book. The terms "ABX" and "blind" do not appear in the index, nor do they appear in the attached brochure. The index does mention two tests, duo-trio and triangle, which are somewhat related to ABX.

I have ordered the "Sensory Evaluation Techniques" book and the "Sensory Testing Methods" manual which is published by the American Society for Testing and Materials. I will post a thorough review of each after I have read them.

So far, I have learned that ABX, duo-trio and triangle tests are forms of discrimination tests. For any discrimination test to be scientifically valid, the evaluation panel must consist of trained evaluators. The evaluators do not necessarily have to be experts in the stimuli they are evaluating, but they must be trained in the interpretation of such stimuli. Questionnaires must be given to assess the interpretive skill of the evaluators.

In fact, if your "literature review" failed to find double blinds in the abstracts, it's because the authors didn't think it was worth mentioning, whereas if it was unblinded, it's the first thing they'd mention.

Let me make sure I understand your point: The author of a peer-reviewed scientific paper would not mention a test used in the paper because they didn't think it was worth mentioning. That does not seem very "scientific" to me. Please point us to some peer reviewed and scientifically accepted documentation that supports this practice.

I am in agreement with Joe's (hearingimpared's) reply to your comments about his hearing ability and my alleged trolling, therefore I have nothing to add to his points.

Proud and loyal citizen of the Digital Domain and Solid State Country! -

Dude, I do this for a living. I've read big chunks of the books in question. I'd send a grad student out to fetch them, but they're on spring break.

But, "Consumer Sensory Testing For Product Development" is on google books; page one:

"The big difference between consumer sensory and market research testing is that the sensory test is generally conducted with coded, unbranded products, whereas market research is most frequently done on branded products." It goes on to explain the reason for this (think Bose), blah, blah, blah.

Please. Read these books before you say what's in them.

Also, please go read some journal articles; you work at a university, no? You should be able to access full text. A lot of the time, details of blinding, etc. don't appear in the abstracts, simply because it's pretty boring and not worth wasting the limited text on. Sometimes they don't even go in the full text due to space constraints; you just reference a prior publication.

Anyhow, I've got a busy day, but if I get the chance, I'll run a pubmed search and get you a list of articles a mile long that are double blinded sensory data. There's probably even some ICH guidelines that cover it. -

...and come to think of it, duo-trio and triangle tests ARE types of blinded tests. Where are you reading about these? -

When I think I hear something new with a different cable I often have to go back to see if it sounds the same with the old cable. Sometimes it does, sometimes not. I always have to wonder though, if it sounds the same with the two cables then why did it catch my attention this time but never before?? I'm guessing that something else changed.

madmax

Vinyl, the final frontier...

Avantgarde horns, 300b tubes, thats the kinda crap I want...

-

When I think I hear something new with a different cable I often have to go back to see if it sounds the same with the old cable. Sometimes it does, sometimes not. I always have to wonder though, if it sounds the same with the two cables then why did it catch my attention this time but never before?? I'm guessing that something else changed.

madmax

It's called expectation bias(?). Next time you get some new 'whatevers', have someone switch while you are out of the room. See what you think then.

I have some stuff coming from JPS Labs and Signal. I have a buddy coming over to switch the cable.

My hope is that a positive difference will be made. I want a tight rein on my bias, and having him switch is about the only way I know to accomplish that. -

If buying new cables and expecting them to sound better is supposed to be key to them sounding better, then why did my old cables win?

I guess my brain and my wallet are in a no holds barred death match, and the brain lost.

"The legitimate powers of government extend to such acts only as are injurious to others. But it does me no injury for my neighbour to say there are twenty gods, or no god. It neither picks my pocket nor breaks my leg." --Thomas Jefferson -

It's called expectation bias(?). Next time you get some new 'whatevers', have someone switch while you are out of the room. See what you think then.

I have some stuff coming from JPS Labs and Signal. I have a buddy coming over to switch the cable.

My hope is that a positive difference will be made. I want a tight rein on my bias, and having him switch is about the only way I know to accomplish that.

My bias when considering and testing a new item is to SAVE MONEY. That in itself causes me to be more critical of the new gear, and I always give each piece a long listen, as stated above. I have to admit to being excited about auditioning a new piece of gear, regardless of price, only to be disappointed in its performance.

See my post above about the VTL 5.5 preamp. I was, as you put it, expecting it to sound stellar, especially when I took into consideration the rave reviews and recommendations of my friends. I was greatly disappointed in the sound and was relieved to get more than I paid for it when I sold it. -

Dude, I do this for a living. I've read big chunks of the books in question. I'd send a grad student out to fetch them, but they're on spring break.

Anyhow, I've got a busy day, but if I get the chance, I'll run a pubmed search and get you a list of articles a mile long that are double blinded sensory data. There's probably even some ICH guidelines that cover it.

Thank you for offering to help. I'm sure that with an expert in the field, such as yourself, assisting with this study, we will soon come to a correct understanding.

I am looking forward to receiving the two books I mentioned a few posts back. -

Good evening audiophile brethren. Now, I have some follow up regarding my inquiry into ABX testing for power cables. Today, my textbook "Sensory Evaluation Techniques" (hereafter referred to as "SET") arrived. The principal author of SET was the late Dr. Morten C. Meilgaard, who was a world renowned pioneer and the foremost expert in the field of sensory science. SET is the educational standard in the field of sensory science.

My power cables liked this book.

You may recall that I was having trouble finding references on blind tests and particularly on "ABX" tests in the scholarly psychological literature. I thought perhaps ABX might be called something else by professionals in the field. I did an Internet search on alternative names for ABX tests. What I found was that the professional term for ABX test is "duo-trio balanced reference mode test". This test is covered on pages 72-80 of SET. That is the good news.

The bad news is that the duo-trio balanced mode (ABX) test does not appear to be a good test for audio gear. I, and others, had always held this position from a common sense and intuitive perspective. However, up to now, I had never sought any scholarly references on the subject. I really didn't care...until recently.

From pages 72-73 of SET:

"Duo-Trio Test

Scope and Application

The duo-trio test (ISO 2004a) is statistically less efficient than the triangle test because the chance of obtaining a correct result by guessing is 1 in 2. On the other hand, the test is simple and easily understood.

Use this method when the test objective is to determine whether a sensory difference exists between two samples. This method is particularly useful in situations

1. To determine whether product differences result from a change in ingredients, processing, packaging, or storage.

2. To determine whether an overall difference exists, where no specific attributes can be identified as having been affected.

The duo-trio test has general application whenever more than 15, and preferably more than 30, test subjects are available. As a general rule, the minimum is 16 subjects, but for less than 28, the beta error is high. Discrimination is much improved if 32, 40, or a larger number [of subjects] can be employed."

[Beta error is a statistical error in testing when it is concluded that something is negative when it is actually positive. Beta error is often referred to as "false negative".]

"Two forms of the test exist: the constant reference mode, in which the same sample, usually drawn from regular production, is always the reference, and the balanced reference mode [or ABX], in which both of the samples being compared are used at random as the reference.

Use the constant reference mode with trained subjects whenever a product well known to them can be used as the reference.

Use the balanced reference [ABX] mode if both samples are unknown or if untrained subjects are used."
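For anyone who wants to sanity-check the panel-size guidance quoted above, here is a minimal sketch in Python. It treats each subject's answer as an independent trial with a 1-in-2 chance of being right under pure guessing. The one-sided 5% significance level and the 75% true success rate are assumptions picked for illustration, not figures from SET, and the function names are hypothetical.

```python
from math import comb

def binom_tail(n, k, p):
    """Probability of k or more correct answers out of n when each is correct with probability p."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def critical_count(n, p_guess=0.5, alpha=0.05):
    """Smallest score that pure guessing reaches with probability <= alpha, or None if no score qualifies."""
    for k in range(n + 1):
        if binom_tail(n, k, p_guess) <= alpha:
            return k
    return None

def beta_error(n, p_true, p_guess=0.5, alpha=0.05):
    """Chance of missing a real difference: the panel scores below the critical count."""
    k = critical_count(n, p_guess, alpha)
    if k is None:  # panel too small to ever reach significance
        return 1.0
    return 1.0 - binom_tail(n, k, p_true)

if __name__ == "__main__":
    # ASSUMPTION: 75% true success rate, chosen only for illustration.
    for n in (16, 28, 32, 40):
        print(n, critical_count(n), round(beta_error(n, 0.75), 3))
```

Under these assumptions a 16-person panel misses the assumed difference far more often than a 32-person panel does. A different assumed success rate would give different numbers.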

In summary, for scientifically valid results:

1. An ABX (duo-trio balanced mode) test generally requires a subject population of at least 16 persons. Optimum subject population is at least 32 or more persons.

2. An ABX (duo-trio balanced mode) test which employs a subject size of less than 28 persons generates high rates of beta error (false negatives or "no differences between samples") in the results.

3. An ABX (duo-trio balanced mode) test must compare samples which are unknown (unfamiliar) to the test subjects.

4. An ABX (duo-trio balanced mode) test can use subjects that are untrained.

Criteria #3 and #4 are particularly troublesome as they allow subjects that are both untrained and unfamiliar with the product samples. Is it wise to accept advice from people unfamiliar with and untrained in the thing they are offering advice on? Is this good science? I don't think so.

Another troublesome point is that the ABX test is statistically inefficient because the chance of guessing a right answer is 50%.
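To put a number on the 50% guessing chance, the sketch below computes the exact probability that pure guessing produces a given score or better, for a test with a 1-in-2 chance (duo-trio) and, for comparison, a 1-in-3 chance (triangle). The score of 12 out of 16 is a made-up example and the function name is hypothetical.

```python
from fractions import Fraction
from math import comb

def guessing_p_value(n_correct, n_trials, p_guess):
    """Exact probability of n_correct or more hits in n_trials when every answer is a guess."""
    p = Fraction(p_guess)
    return sum(
        comb(n_trials, i) * p**i * (1 - p)**(n_trials - i)
        for i in range(n_correct, n_trials + 1)
    )

if __name__ == "__main__":
    # Hypothetical result: 12 correct identifications out of 16 trials.
    duo_trio = guessing_p_value(12, 16, Fraction(1, 2))
    triangle = guessing_p_value(12, 16, Fraction(1, 3))
    print(float(duo_trio), float(triangle))
```

The same score is harder to reach by luck when the guessing chance is 1 in 3, so a 1-in-2 test needs more trials for the same level of confidence.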

Further Study - I've Been Getting Help

Every bit of scholarly writing I have collected so far, from highly credible sources, indicates that ABX testing is not scientifically valid for the evaluation of audio equipment. So, what does constitute a valid evaluation methodology for audio equipment?

During my frustrations at not being able to find reference material on ABX or "blind" testing for audio stimuli, I sent emails to the chairpersons of psychology departments at three universities inquiring about a scientifically appropriate and accurate evaluation methodology for audible differences in electronic music reproduction equipment. I received prompt responses with contact information for experts inside and outside of their respective departments. I was further informed that my study falls within the realm of sensory science rather than psychology. Sensory science is a cross-disciplinary field comprising knowledge from many fields (chemistry, physics, engineering, statistics, psychology, etc.).

One thing I was very surprised to learn was that the ABX test, which is designed to inhibit or remove observer bias, can actually generate a more dangerous form of bias: guessing.

Guessing bias results from the fact that people either consciously or subconsciously know that they are being "tricked" in some way by an ABX test. No one likes to be tricked. No one likes to be fooled. No one likes to be wrong. Therefore, some, perhaps many, subjects participating in an ABX test, rather than focus on the attributes of the two samples, will focus on trying to guess the correct answer. This is particularly true when the test subjects are untrained.

So, what is a scientifically valid and accurate evaluation methodology for audio equipment? I'm still in the early phase of my research. However, I will tell you that everything I have read and been told so far indicates that the subjectivists have been doing it right.;)

More later.

You know I don't like it when you post these kinds of threads.

Is that so? Well, as my mom used to tell me when I was a little devil, "this may not be good to you, but it's good for you". ~DK -

While admirable from a research perspective, as a consumer I don’t see the point of this – if I buy a product that claims an unmistakable audible benefit then that is what I would expect to hear. I shouldn’t need to worry about sound waves bouncing off someone’s head and collapsing my stereophonic sound field. I shouldn’t need training to hear the differences, they should be there for all to hear…

Go to any random cable site and you’ll see testimonials:

"The difference is immediately noticeable and astounding.... even more noticeable on speakers than on [stereo buss processors]. Anywhere you put them, it helps. Power cords are the filters of the future.”

“What can be expected from a Kaptovator is amazing realism to music from the highest highs to the lowest lows. THE KAPTOVATOR is honestly one of the best upgrades you can make to any great system component”

Also in regards to your concerns about the testing methodology, if there were issues with the setups should it not still be a level playing field? Meaning if my listening area is set up poorly (it probably is) then shouldn’t the power cable help improve it?

Keep in mind I’m not ‘dissing’ your pursuit here, just wondering why something as easy as ‘does it sound better’ can get so complex. -

Raife, I don't see an obligatory arrow to the 'ears' on the cover, just eyes, nose, mouth.

And don't claim SET; single ended triode is what that means to 'us'. Get a new moniker, at least for consistency.

just wondering why something as easy as ‘does it sound better’ can get so complex.

It's easy to make a mountain out of a molehill, when people forget (or never realize to begin with) the simple objective of the hobby.

<again, in this order>

It's:

1. Listening to Music.

2. On the Hifi.

Pretty simple stuff (and fun) at the end of the day.

Cheers,

Russ

Check your lips at the door woman. Shake your hips like battleships. Yeah, all the white girls trip when I sing at Sunday service. -

I shouldn’t need training to hear the differences, they should be there for all to hear…

Folks who take a serious interest in audio reproduction do learn how to listen and can tell when there is just a small difference. However, not everyone hears the same and what I hear as a difference, you may not. And then you've got folks that couldn't tell the difference between a boom box and a top notch rig.

Political Correctness'.........defined

"A doctrine fostered by a delusional, illogical minority and rabidly promoted by an unscrupulous mainstream media, which holds forth the proposition that it is entirely possible to pick up a t-u-r-d by the clean end."

President of Club Polk -

While admirable from a research perspective, as a consumer I don’t see the point of this – if I buy a product that claims an unmistakable audible benefit then that is what I would expect to hear. I shouldn’t need to worry about sound waves bouncing off someone’s head and collapsing my stereophonic sound field. I shouldn’t need training to hear the differences, they should be there for all to hear…

Go to any random cable site and you’ll see testimonials:

"The difference is immediately noticeable and astounding.... even more noticeable on speakers than on [stereo buss processors]. Anywhere you put them, it helps. Power cords are the filters of the future.”

“What can be expected from a Kaptovator is amazing realism to music from the highest highs to the lowest lows. THE KAPTOVATOR is honestly one of the best upgrades you can make to any great system component”

Also in regards to your concerns about the testing methodology, if there were issues with the setups should it not still be a level playing field? Meaning if my listening area is set up poorly (it probably is) then shouldn’t the power cable help improve it?

Keep in mind I’m not ‘dissing’ your pursuit here, just wondering why something as easy as ‘does it sound better’ can get so complex.

It's pretty simple to me. Not all audio gear makes an instant dramatic change (improved or otherwise) to the music. Many components make subtle changes and require time to listen to the different gear being tested to determine these subtle "improvements." The next thing to consider is, are these subtle improvements worth the money? I say yes, because each improvement, subtle or not, is cumulative.

Great write up Ray! -

Raife, I don't see an obligatory arrow to the 'ears' on the cover, just eyes, nose, mouth.

And don't claim SET; single ended triode is what that means to 'us'. Get a new moniker, at least for consistency.

It's easy to make a mountain out of a molehill, when people forget (or never realize to begin with) the simple objective of the hobby.

<again, in this order>

It's:

1. Listening to Music.

2. On the Hifi.

Pretty simple stuff (and fun) at the end of the day.

Cheers,

Russ

Russ, I agree with your high level view of the hobby. However, to me, the fun of this hobby is in getting to "listening to music" "on the hifi": the journey of finding the right gear, or combination thereof, to get the enjoyment one likes while listening to music. Once that is achieved, then the real fun begins... listening to music. -

While admirable from a research perspective, as a consumer I don’t see the point of this – if I buy a product that claims an unmistakable audible benefit then that is what I would expect to hear. I shouldn’t need to worry about sound waves bouncing off someone’s head and collapsing my stereophonic sound field. I shouldn’t need training to hear the differences, they should be there for all to hear…

If I go to a tire dealer that specializes in high end, high performance tires, I expect that I would see some advertising posters for tires that claim "significant gas mileage improvement", "extreme braking and cornering enhancement", "outrageous reduction in road noise", etc., etc. Then, when I put a set of those high end tires on my old klunker, none of the manufacturer's claims are realized and I persecute them for selling snake oil tires.

Is the manufacturer really making bogus claims and selling snake oil tires? Maybe, maybe not. Whatever they are selling, they expect the consumer to know whether a particular tire is appropriate for their vehicle.

The same thing goes on in audio. People don't do the research required to properly match gear to their system, and then scream snake oil when the claimed effect is not realized. Sure, snake oil audio products exist, but careful and informed consumers know how to avoid such scams. Just use the same common sense you would use when buying anything else. Just because something does not work for you doesn't mean it is bogus.

I shouldn’t need training to hear the differences, they should be there for all to hear…

I don't need training to enjoy playing golf. All I would be doing is hitting a little ball into a little hole with a stick...right? However, if I want to seriously pursue golf as a hobby and get the most out of it, then I would need to invest in training, the proper gear, and the time required to educate myself about the hobby.

Go to any random cable site and you’ll see testimonials:

"The difference is immediately noticeable and astounding.... even more noticeable on speakers than on [stereo buss processors]. Anywhere you put them, it helps. Power cords are the filters of the future.”

“What can be expected from a Kaptovator is amazing realism to music from the highest highs to the lowest lows. THE KAPTOVATOR is honestly one of the best upgrades you can make to any great system component”

There is no one size fits all in any field of merchandise. Even when you see an article of clothing that claims "one size fits all" doesn't common sense tell you that the product is not going to fit people at the far ends of the size spectrum and those who have deformities?

Again, the consumer must use judgement and common sense. Some products are intended for high resolution audiophile systems.

Also in regards to your concerns about the testing methodology, if there were issues with the setups should it not still be a level playing field? Meaning if my listening area is set up poorly (it probably is) then shouldn’t the power cable help improve it?

No. Not necessarily. As I said, there is no one size fits all. Power cables are designed to primarily filter power line noise. If an audio system is full of other types of noise, or if the consumer's home has poor quality power, then the benefit offered by a high performance power cord may not be realized.

Keep in mind I’m not ‘dissing’ your pursuit here, just wondering why something as easy as ‘does it sound better’ can get so complex.

No diss was inferred. Some of the psychological, auditory and sensory science professionals I communicated with during the course of this research were aware of the great audiophile debate. They thought it was ludicrous that people just can't find what sounds good to them and be done with it.

Raife, I don't see an obligatory arrow to the 'ears' on the cover, just eyes, nose, mouth.

I think this was done on purpose...to keep them out of the great audiophile debates. :)

It's easy to make a mountain out of a molehill, when people forget (or never realize to begin with) the simple objective of the hobby.

<again, in this order>

It's:

1. Listening to Music.

2. On the Hifi.

Pretty simple stuff (and fun) at the end of the day.

It's simple if your goal is the enjoyment of music. It's real complex if you have other motivations.

And then you've got folks that couldn't tell the difference between a boom box and a top notch rig.

Unfortunately, these are the folks most likely to criticize audiophiles as lunatics and audiophile products as snake oil. -

DarqueKnight wrote: »1. An ABX (duo-trio balanced mode) test generally requires a subject population of at least 16 persons. Optimum subject population is at least 32 or more persons.

2. An ABX (duo-trio balanced mode) test which employs a subject size of less than 28 persons generates high rates of beta error (false negatives or "no differences between samples") in the results.

3. An ABX (duo-trio balanced mode) test must compare samples which are unknown (unfamiliar) to the test subjects.

4. An ABX (duo-trio balanced mode) test can use subjects that are untrained.

Criteria #3 and #4 are particularly troublesome as they allow subjects that are both untrained and unfamiliar with the product samples. Is it wise to accept advice from people unfamiliar with and untrained in the thing they are offering advice on? Is this good science? I don't think so.

Another troublesome point is that the ABX test is statistically inefficient because the chance of guessing a right answer is 50%.

In meetings most the day, but:

1) Correct; however, this is entirely dependent on the alternative. If a person can correctly discern a difference 95% of the time, then you need a smaller sample than if it's 75%.

2) Incorrect. See above. They have made a generalization (which I'll back-calculate later), but it's highly dependent on the alternative. You can have low beta at 16 with a high alternative.

3) Incorrect. The book is saying ABX is valid with samples which are unknown (unfamiliar) to the test subjects.

4) Correct.
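The dependence on the alternative in points 1 and 2 can be sketched numerically. This is a minimal sketch, not something taken from the thread or from SET: it assumes a one-sided exact binomial test against 50% guessing, a 5% alpha, and an 80% power target, and the function names are hypothetical.

```python
from math import comb

def upper_tail(n, k, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def critical_count(n, alpha=0.05, p_guess=0.5):
    """Smallest number of correct answers that rejects pure guessing at level alpha.

    Returns n + 1 when no count out of n is extreme enough to reject.
    """
    for k in range(n + 1):
        if upper_tail(n, k, p_guess) <= alpha:
            return k
    return n + 1

def smallest_sample(p_true, alpha=0.05, target_power=0.80, max_n=500):
    """Smallest n whose power against the assumed true rate reaches target_power."""
    for n in range(1, max_n + 1):
        k = critical_count(n, alpha)
        if upper_tail(n, k, p_true) >= target_power:
            return n
    raise ValueError("no sample size up to max_n reaches the target power")

# Assumed alternatives: the 95% and 75% detection rates mentioned in point 1.
for p_true in (0.95, 0.75):
    print(f"true rate {p_true:.2f}: smallest n = {smallest_sample(p_true)}")
```

Under these assumptions a 95% true detection rate reaches the power target with 8 subjects, while a 75% rate needs a noticeably larger panel. A different alpha or power target would shift both numbers.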

Your conclusions are a bit odd. You state:

"In fact, "Sensory Evaluation Techniques" is widely regarded as the standard guide manual, across many scientific fields (psychology, food science, engineering, etc.), for conducting evaluative trials for sensory stimuli."

According to this book ABX testing is valid (with sufficient sample size) for conducting evaluative trials for sensory stimuli. So your research says one thing, yet you conclude the opposite.

The far better argument is simply that this book is not an appropriate source to answer your question. I was reading it for taste (was doing an RFP for a sports drink comparison), but I don't recall anything about sound testing. Why torture what's in the book to try to answer a different question?

Tell me more about this "guessing bias". I'm not familiar with the way in which you're using that phrase.

-

Maybe, depending on the system and its performance capabilities, but generally no. A system must be set up properly to hear and be able to distinguish many aspects of the reproductive capability of any component.

Meaning if my listening area is set up poorly (it probably is) then shouldn’t the power cable help improve it?

You might notice a slight increase in lower octave performance but if the system is not set up properly and you are not in the sweet spot, the changes will [most likely] not be detected, even by the most trained ear.

~ In search of accurate reproduction of music. Real sound is my reference and while perfection may not be attainable? If I chase it, I might just catch excellence. ~ -

Thanks for the instructive feedback.

In meetings most the day, but:

1) Correct; however, this is entirely dependent on the alternative. If a person can correctly discern a difference 95% of the time, then you need a smaller sample than if it's 75%.

OK.

2) Incorrect. See above. They have made a generalization (which I'll back-calculate later), but it's highly dependent on the alternative. You can have low beta at 16 with a high alternative.

You may be right. I claim no expertise in sensory science. However, since the source of criterion 2 was from a book by three internationally known sensory scientists, and since I don't know you or what your academic and professional background is, I will go with them for now. I do realize that no one, regardless of the extent of their education or experience, is exempt from error. If you provide credible evidence that this is a generalization, I would accept and appreciate it as it helps understanding.

3) Incorrect. The book is saying ABX is valid with samples which are unknown (unfamiliar) to the test subjects.

SET states on page 73:

"Use the balanced referenced mode if both samples are unknown or if untrained subjects are used."

I said:

DarqueKnight wrote: »...for scientifically valid results:

3. An ABX (duo-trio balanced mode) test must compare samples which are unknown (unfamiliar) to the test subjects.

I don't see a discrepancy between what the book stated and my summary statement.

4) Correct.

OK.

Your conclusions are a bit odd. You state:

"In fact, "Sensory Evaluation Techniques" is widely regarded as the standard guide manual, across many scientific fields (psychology, food science, engineering, etc.), for conducting evaluative trials for sensory stimuli."

According to this book ABX testing is valid (with sufficient sample size) for conducting evaluative trials for sensory stimuli. So your research says one thing, yet you conclude the opposite.

SET teaches that ABX testing, like all sensory testing methods, is valid for some types of sensory stimuli (and situations) and inappropriate for others.

My point is that duo-trio reference mode (ABX) testing is inappropriate for audio gear testing because it puts the subject's (evaluator's) senses at a crippling disadvantage.

The far better argument is simply that this book is not an appropriate source to answer your question. I was reading it for taste (was doing an RFP for a sports drink comparison), but I don't recall anything about sound testing. Why torture what's in the book to try to answer a different question?

Well, if you can't recall, you really can't say can you? I really can't see where I am "torturing" what is in the book. I can see where this thread might be "torture" for some, but, as Mr. Spock once said:

"If there are self-made purgatories, then we all have to live in them."

All I am doing is evaluating a sensory test (ABX) with regard to its appropriateness for measuring audible differences in audio gear. Dr. Meilgaard's sensory expertise was in the field of taste. However, the evaluative techniques in SET are applicable to any of the five senses, which the book discusses in chapter 2. Again, if you have some evidence that this is not so, I will be happy to review such documentation.

Tell me more about this "guessing bias". I'm not familiar with the way in which you're using that phrase.

I have already articulated my explanation rather well. Since you are a professional in the field, you should have no problem finding additional information. Indeed, I would expect you to expound further to us because of your expertise.

-

It's not sensory science; it's statistics. The beta is a function of the alternative hypothesis, or what you believe is the true rate at which people can correctly identify different test articles. With 16 subjects, that rate needs to be around 80% or above to get the 20% beta that's the standard in scientific practice. A rate of 85% gives you a beta of 8% with 16 subjects. I've had the FDA tell one of my clients that their sample size was too large with a beta that small and to use FEWER subjects.

DarqueKnight wrote: »Thanks for the instructive feedback.

You may be right. I claim no expertise in sensory science. However, since the source of criterion 2 was from a book by three internationally known sensory scientists, and since I don't know you or what your academic and professional background is, I will go with them for now. I do realize that no one, regardless of the extent of their education or experience, is exempt from error. If you provide credible evidence that this is a generalization, I would accept and appreciate it as it helps understanding.

Search binomial distribution and power; you can derive this yourself.
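For readers who want to check the figures, here is a minimal sketch of that derivation. It assumes a one-sided exact binomial test against 50% guessing at a 5% alpha; those settings and the function names are assumptions for illustration and are not stated in the thread.

```python
from math import comb

def upper_tail(n, k, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def beta_error(n, p_true, alpha=0.05, p_guess=0.5):
    """False-negative rate of the test when the true detection rate is p_true."""
    # Smallest count of correct answers that rejects pure guessing at level alpha.
    k = next((k for k in range(n + 1) if upper_tail(n, k, p_guess) <= alpha), n + 1)
    # Beta is the chance of falling short of that count despite a real effect.
    return 1.0 - upper_tail(n, k, p_true)

# Assumed true detection rates, evaluated for a 16-subject panel.
for p_true in (0.75, 0.80, 0.85):
    print(f"true rate {p_true:.2f}: beta = {beta_error(16, p_true):.3f}")
```

With 16 subjects these assumptions give a beta of roughly 20% at an 80% true rate and roughly 8% at 85%, in line with the figures quoted above. Lower true rates push beta up quickly.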

If the test article is unknown, you must use ABX. If the test article is known, you may use ABX. Your statement was that the samples MUST be unknown in an ABX test. This is not the case, and furthermore, the recommended alternative is another blind test (one known, two unknowns).

DarqueKnight wrote: »I don't see a discrepancy between what the book stated and my summary statement.

That's clearer; however, see above. The evaluator can be familiar with the articles. There is no disadvantage.

DarqueKnight wrote: »My point is that duo-trio reference mode (ABX) testing is inappropriate for audio gear testing because it puts the subject's (evaluator's) senses at a crippling disadvantage.

Well, you've got the book in front of you. Is there anything about audio in there?

DarqueKnight wrote: »Well, if you can't recall, you really can't say can you?

I am completely unaware of "guessing bias" referring to an issue with someone thinking that they're being tricked by the ABX test. I've seen it refer to two things: 1) the 50% bump that you get since they have a 50/50 chance of guessing the outcome, and 2) in visual acuity tests, people usually will guess at letters they can't actually see. "e" and "o" look similar, so people will take a shot at it. Could you point me to where you saw "guessing bias" used that way?

DarqueKnight wrote: »I have already articulated my explanation rather well. Since you are a professional in the field, you should have no problem finding additional information. Indeed, I would expect you to expound further to us because of your expertise.

-

Doesn't matter because...

unc2701 wrote: The far better argument is simply that this book is not an appropriate source to answer your question...but I don't recall anything about sound testing.

I do, however, agree that a book is not an appropriate source to answer these questions.

DarqueKnight wrote: My point is that duo-trio reference mode (ABX) testing is inappropriate for audio gear testing because it puts the subject's (evaluator's) senses at a crippling disadvantage.

-

I had the privilege of spending three days at the AXPONA show here in Jacksonville. Many of the rooms had seating similar to this test (multiple rows of multiple seats). If I had enough patience I would eventually occupy the Sweet Spot in the room for a short time. I actually rotated through many positions and each was very different in its acoustics and sound. Only from the one position could the system be evaluated (and then only some were worth evaluating). Being unfamiliar with the systems was interesting but in each room the seat position made all the difference. Also sitting alone (happened occasionally) or with warm bodies close to your side completely changed the sound. The only room that I was pleased to sit in any seat was the VAC tube amps and King electrostatics. Seemed to produce an amazing soundstage even when directly behind someone. Still wondering about that one.

Rick

integrated w/DAC module Gryphon Diablo 300

server Wolf Alpha 3SX

phono pre Dynamic Sounds Associates Phono II

turntable/tonearms Origin Live Sovereign Mk3 dual arm, Origin Live Enterprise Mk4, Origin Live Illustrious Mk3c

cartridges Miyajima Madake, Ortofon Windfeld Ti, Ortofon

speakers Rockport Mira II

cables Synergistic Research Cables, Gryphon VPI XLR, Sablon 2020 USB

rack Adona Eris 6dw

ultrasonic cleaner Degritter -

Wearing my glasses changes the sound stage. What else would you expect with a couple of bodies next to you as well as being completely out of the sweet spot which can change within an inch or less on a properly dialed in system?

-

If the test article is unknown, you must use ABX. If the test article is known, you may use ABX. Your statement was that the samples MUST be unknown in an ABX test. This is not the case, and furthermore, the recommended alternative is another blind test (one known, two unknowns).

Sir, you are causing me to doubt your reading comprehension. I am going to make a third and final attempt to clarify this point.

1. SET teaches (4th Ed., pages 72 and 73) that the duo-trio test comes in two (2) forms: constant reference mode and balanced reference mode.

2. The constant reference mode, WHICH IS NOT EQUIVALENT to ABX, "is used with trained subjects whenever PRODUCTS WELL KNOWN TO THEM can be used as the reference".

3. The balanced reference mode, WHICH IS EQUIVALENT to ABX, is used "if both samples are unknown or if untrained subjects are used".

Therefore, if the test article is known, duo-trio constant reference mode test is used, which, according to SET and other credible sources, IS NOT equivalent to ABX.

Please go back and re-read the direct quotes from SET, which are in bold blue type in post #48.

Well, you've got the book in front of you. Is there anything about audio in there?

I have already stated that SET is a text about general sensory science. It briefly covers noise (pg. 11) and hearing (pgs. 21-22). It also discusses the other five human senses.

This is from the preface:

"The text is meant as a personal reference volume for food scientists, research and development scientists, cereal chemists, perfumers, and other professionals working in industry, academia, or government who need to conduct good sensory evaluation."

If you are making the argument that SET must be dismissed because it was not specifically written for audio, then you must also dismiss ABX testing because it was not specifically designed for audio.

You might be pleased to know that the following textbook, which I expect to arrive early next week, is specifically written for audio applications. The link takes you to the Amazon.com page for it. This book was recommended to me by a former research engineer with Lucent Technologies who was doing work in sound quality improvement for telephones and other personal communication devices.

"Perceptual Audio Evaluation-Theory, Method and Application"

The index for this book is viewable on its Amazon page and I see that ABX testing is covered on pages 317 and 318.

I am completely unaware of "guessing bias" referring to an issue with someone thinking that they're being tricked by the ABX test. Could you point me to where you saw "guessing bias" used that way?

This was a point that came up during a conversation with a psychology academic. I would prefer to wait and expound further on this concept when I submit my research results to an appropriate journal. Please be patient until then.

Other Concerns

I would ask that you please stay on topic or I will have no choice but to report you to the management. The subject of this thread is twofold:

1. The ABX test referenced in the first post has numerous procedural flaws that rendered the test misleading, ineffective and unreliable.

2. ABX testing is not a scientifically valid test method for audio due to:

A. High error rates (high rate of false negatives with small subject population, 50% chance of guessing correct answer).

B. The requirement for untrained subjects (evaluators).

C. The requirement for unfamiliar test samples.

Those three disadvantages, coupled with the way ABX tests for audio are set up (e.g. subjects arranged in multi-person, multi-row seating, etc.) are compelling reasons to reject ABX testing for audio.

If you have some valid and credible information, whether complementary or contradictory, pertaining to subjects 1 and/or 2 above, then I welcome your continued participation. Otherwise, if you want to discuss other topics, I ask that you start a separate thread. I'm sure there are others here who would love to discuss "binomial distribution and power".

One more thing: please refrain from profanity. It is against forum rules and could cause some adverse action to be taken against you by the management. In addition to this, it is unbecoming of an obviously well educated professional such as yourself. Thank you.

Fair enough. I can't see for ****...

-

Good morning audiophile brethren. I hope this letter finds you in good spirits and in the best of health. I just wanted to stop by and drop off a "package" pertaining to the application of sensory science techniques to sound quality.

Note: Bold blue text is a direct quote from the peer-reviewed sensory science literature.

Gail V. Civille, one of the authors of "Sensory Evaluation Techniques" (SET) has done some work in the sensory evaluation of sound. From SET page 22:

"A summary of sensory methods applied to sound is given by Civille and Seltsam (2003)."

The full citation for the nine page paper by Civille and Seltsam was given at the end of chapter 2:

"Sensory evaluation methods applied to sound quality", Gail Vance Civille and Joanne Seltsam, Noise Control Engineering Journal, 51:4, 262 (2003).

The Noise Control Engineering Journal is the peer-reviewed journal of the Institute of Noise Control Engineering (INCE). I purchased a copy of the Civille and Seltsam paper from the INCE website.

Abstract

"Sensory Evaluation Methods Applied To Sound Quality"

"Sensory evaluation methods, applied for several decades to foods, personal care, home care and other consumer products are a prime resource for techniques when evaluating sound properties. The use of rigorous test protocols, careful selection of subjects, appropriate selection of sensory attributes, sophisticated univariate and multivariate statistical techniques all define effective sensory practices. Current sensory evaluation methods and practices are described with examples of practical application to sound quality. Examples of recent research that applies sensory strategy to measuring sound quality are provided. Further recommendations are made to encourage the use of these validated techniques in the measurement of descriptive, hedonic and quality measures for sounds."

Gail Vance Civille and Joanne Seltsam

[Definition: hedonic - characterizing or pertaining to pleasure]

In this paper, in the section on sensory evaluation methods and their application to sound, Civille and Seltsam teach that discrimination testing methods (such as duo-trio reference mode, commonly known as ABX) are used to determine if product samples are different or similar to each other irrespective of pleasure or preference considerations (p. 264). Hence, I inferred that discrimination tests are not optimal evaluation tools for products intended for entertainment purposes (abstract and p. 268).

Civille and Seltsam teach that descriptive testing methods (such as preference tests and acceptance tests) are used when detailed sensory characteristics of a product need to be understood and documented (p. 266). Rigorous training of panelists (10-20) screened for specific skills is needed to perform descriptive testing.

A preference test is indicated if the evaluation is specifically designed to pit one product against another in situations such as product improvement or parity with competition (p. 268).

An acceptance test is indicated when it needs to be determined how well a product is liked. The product is compared to a well-liked product and a hedonic (pleasure inducement) scale is used to indicate degrees of unacceptable to acceptable, or dislike to like. From relative acceptance scores one can infer preference; the sample with the higher score is preferred (p. 268).

You might want to go back and review the last sentence of the abstract.:)

From SET pages 173-174:

"All descriptive analysis methods involve the detection (discrimination) and the description of both the qualitative and quantitative sensory aspects of a product by trained panels of 5-100 judges.

Panelists must be able to detect and describe the perceived sensory attributes of a sample.

Use descriptive tests to obtain detailed description of the aroma, flavor, and oral texture of foods and beverages, skinfeel of personal care products, handfeel of fabrics and paper products, and the appearance and sound of any product."

Revised Summary

In summary, for scientifically valid results:

1. An ABX (duo-trio balanced mode) test must be used where statistical inefficiency can be tolerated, as the chance of guessing a correct result is 50%.

2. An ABX (duo-trio balanced mode) test generally requires a subject population of at least 16 persons. Optimum subject population is at least 32 or more persons.

3. An ABX (duo-trio balanced mode) test which employs a subject size of less than 28 persons generates high rates of beta error (false negatives or "no differences between samples") in the results.

4. An ABX (duo-trio balanced mode) test must compare samples which are unknown (unfamiliar) to the test subjects.

5. An ABX (duo-trio balanced mode) test can use subjects that are untrained.

6. An ABX (duo-trio balanced mode) test is a form of discrimination test. Discrimination tests are not indicated when differences between the SOUND of two products, and particularly the level of pleasure induced by that SOUND, are being evaluated (SET pp. 173-174; Civille and Seltsam, p. 268).

Criteria #4 and #5 are particularly troublesome as they allow subjects that are both untrained and unfamiliar with the product samples. Criterion #6 is troublesome for obvious reasons. Is it wise to accept advice from people unfamiliar with and untrained in the thing they are offering advice on? Is it wise to use a test for music reproduction equipment that is scientifically inappropriate for evaluating sonic differences in products? Is this good science? I don't think so. Do you?

This is what I have learned in the 1.5 weeks I have been researching this subject during my leisure hours.

Question: Every ABX test I have seen used in a music reproduction equipment evaluation achieved results statistically similar to guessing. In light of summary items 1-6 above, are you beginning to understand why?

More later...